sqlchain 0.2.10 was released last month, so it's overdue that I write up some usage info on its two new features.

A new config option, max_blks, sets a limit on the number of blocks kept in the sqlchain database. After each block is added, the total block count is checked, and if it exceeds max_blks the oldest blocks are deleted (including their trxs and outputs rows). There are some caveats to this behaviour: unreferenced addresses are not removed, block header data (quite small) is not deleted, and blob data (signatures if present, and tx input references) is not reclaimed. The blob data could probably be cleaned up without too much trouble, but for now I have left it because it's not critical for typical uses.
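To make the pruning behaviour concrete, here is a minimal sketch of the idea in Python. It is not sqlchain's actual code: it uses an in-memory SQLite database, and the column names (`block_id`, `tx_id`, `addr_id`) and the helper functions are assumptions made up for illustration. Only the table names trxs and outputs come from the description above.

```python
import sqlite3

# Hypothetical, heavily simplified schema (not sqlchain's real one).
SCHEMA = """
CREATE TABLE blocks  (id INTEGER PRIMARY KEY);
CREATE TABLE trxs    (id INTEGER PRIMARY KEY, block_id INTEGER);
CREATE TABLE outputs (id INTEGER PRIMARY KEY, tx_id INTEGER, addr_id INTEGER);
CREATE TABLE address (id INTEGER PRIMARY KEY);
"""

def add_block(conn, height):
    """Insert one block containing a single tx that pays one new address."""
    conn.execute("INSERT INTO blocks (id) VALUES (?)", (height,))
    conn.execute("INSERT INTO trxs (id, block_id) VALUES (?, ?)", (height, height))
    conn.execute("INSERT INTO address (id) VALUES (?)", (height,))
    conn.execute("INSERT INTO outputs (tx_id, addr_id) VALUES (?, ?)", (height, height))

def prune_blocks(conn, max_blks):
    """Delete the oldest blocks (with their trxs and outputs rows) beyond max_blks."""
    cur = conn.cursor()
    total = cur.execute("SELECT COUNT(*) FROM blocks").fetchone()[0]
    excess = total - max_blks
    if excess <= 0:
        return 0
    # Collect the ids of the oldest blocks before issuing any deletes.
    old = [row[0] for row in
           cur.execute("SELECT id FROM blocks ORDER BY id LIMIT ?", (excess,))]
    for blk in old:
        cur.execute("DELETE FROM outputs WHERE tx_id IN "
                    "(SELECT id FROM trxs WHERE block_id = ?)", (blk,))
        cur.execute("DELETE FROM trxs WHERE block_id = ?", (blk,))
        cur.execute("DELETE FROM blocks WHERE id = ?", (blk,))
    # The address table is deliberately left alone, mirroring the caveat above.
    conn.commit()
    return len(old)

def count(conn, table):
    """Row count helper for the demo tables."""
    return conn.execute(f"SELECT COUNT(*) FROM {table}").fetchone()[0]
```

Calling `prune_blocks` after every `add_block` keeps the block, tx and output counts capped at max_blks, while the address table keeps growing.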

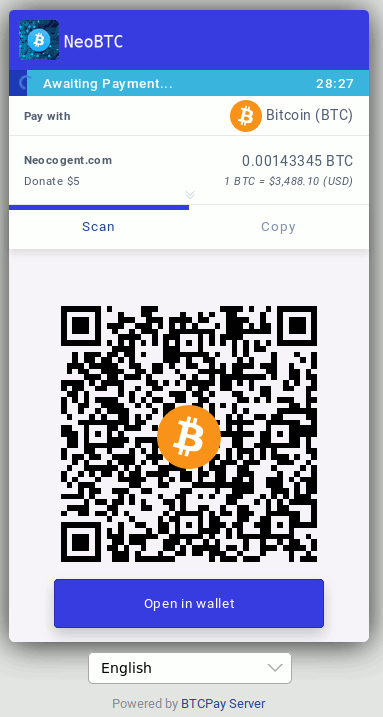

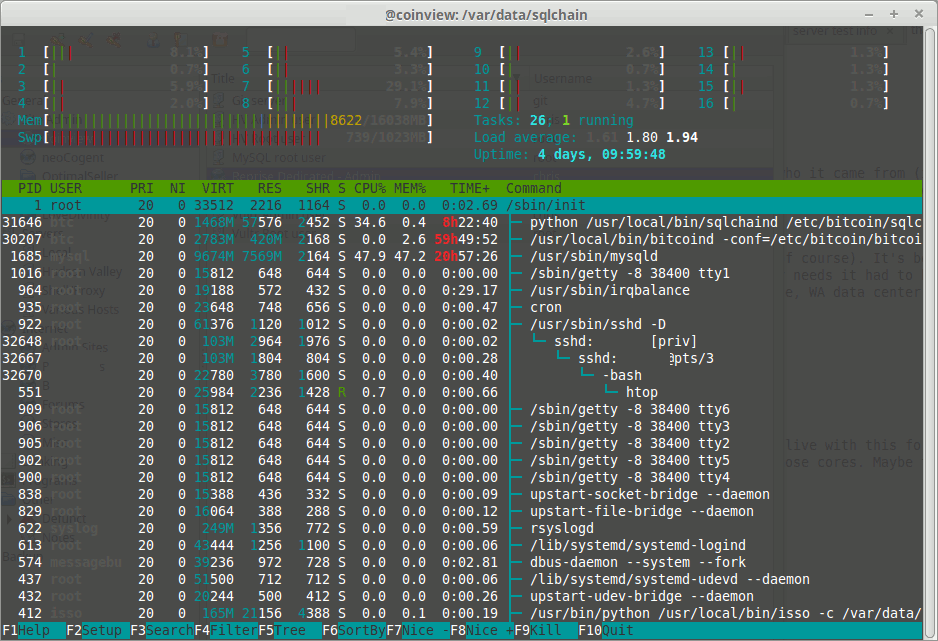

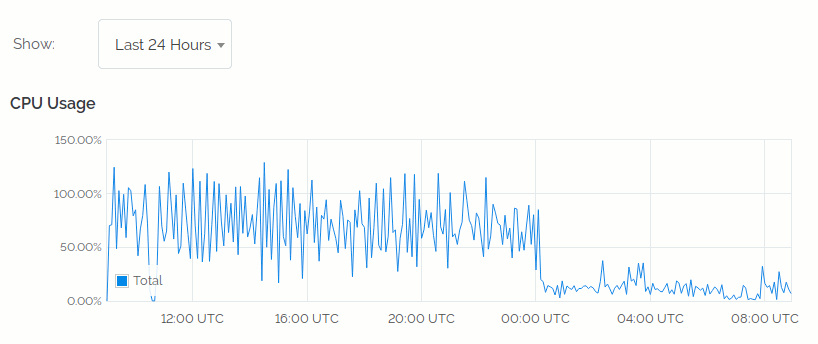

The main purpose of this is to allow a "quick sync" for use cases that only require ongoing tx data processing. So if you want to monitor tx flow or manage a new wallet on top of sqlchain (e.g. payment processing), you can now run a pruning node, set max_blks to cover a few days, and process txs without having to do a full sqlchain sync. To avoid a full sync, set the conf option block to some recent block height and sync will start from there instead of the default 0. In this case only address and blob data for seen blocks will exist, so the total db size will be small but will increase slowly as addresses get added. Other uses might be analyzing the mempool for fee data or producing daily tx statistics.
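As a rough guide to picking values, the arithmetic can be sketched as below. This assumes a coin with a 10-minute target block interval such as Bitcoin, and the function names are my own for illustration. How the two keys are written in your config file depends on your sqlchain setup.

```python
BLOCK_INTERVAL_MIN = 10  # assumed target block spacing (Bitcoin)

def blocks_for_days(days):
    """Approximate number of blocks produced in the given number of days."""
    return days * 24 * 60 // BLOCK_INTERVAL_MIN

def quick_sync_settings(tip_height, days):
    """Suggest max_blks and a starting block for a pruned quick sync."""
    max_blks = blocks_for_days(days)
    start = max(0, tip_height - max_blks)  # never go below the genesis block
    return {"max_blks": max_blks, "block": start}

# Example: keep about three days of blocks when the chain tip is at height 500000.
settings = quick_sync_settings(500000, 3)
```

Here `settings` comes out as max_blks 432 and block 499568, since three days at 144 blocks per day is 432 blocks.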

The other new config option is apis, which tells the sqlchain-api daemon which api modules to load and dispatch requests to. When apis is not present, the default is to load only the "Insight" api. So upgrading to 0.2.10 will cause the other apis to vanish until you add an apis value to your config. The setting is a list of lists (triplets), each entry describing how to handle one api: [ url_path, module_name, entry_function ]. For example, you could copy and customize the insight.py module under a new name, my_api.py, and make an entry like:

apis=[["/api", "sqlchain.my_api", "do_API"]]

Now when sqlchain-api starts it will load only your custom api and process requests according to your specific needs. I have used this recently for a client's custom address watching web app. You can also load multiple apis, as the current default config does on a fresh install:

apis=[["/api", "sqlchain.insight", "do_API"],
      ["/bci", "sqlchain.bci", "do_BCI"],
      ["/rpc", "sqlchain.rpc", "do_RPC"],
      ["/ws", "", "BCIWebSocket"],
      ["/", "", "do_Root"]]
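To show how a triplet list like this can drive loading and dispatch, here is a small self-contained sketch. It is not the sqlchain-api implementation: `load_apis`, `dispatch`, the longest-prefix matching and the treatment of an empty module name as "look the function up in a table of handlers supplied by the caller" are all assumptions for illustration. The example entry uses the standard library's json module as a stand-in so the snippet runs without sqlchain installed.

```python
import importlib
import json

def load_apis(apis, local_handlers):
    """Turn [url_path, module_name, entry_function] triplets into (prefix, handler) routes."""
    routes = []
    for url_path, module_name, func_name in apis:
        if module_name:
            # Import the named module and fetch its entry function.
            module = importlib.import_module(module_name)
            handler = getattr(module, func_name)
        else:
            # Assumption: an empty module name means a handler provided by the caller.
            handler = local_handlers[func_name]
        routes.append((url_path, handler))
    # Try longer prefixes first so that "/" only acts as a catch-all.
    routes.sort(key=lambda route: len(route[0]), reverse=True)
    return routes

def dispatch(routes, path):
    """Return the handler for the first route whose prefix matches path, or None."""
    for prefix, handler in routes:
        if path.startswith(prefix):
            return handler
    return None

def do_root(request):
    """Hypothetical stand-in for a built-in root handler."""
    return "root"

EXAMPLE_APIS = [["/json", "json", "dumps"], ["/", "", "do_root"]]
routes = load_apis(EXAMPLE_APIS, {"do_root": do_root})
```

With these routes, `dispatch(routes, "/json/x")` returns `json.dumps` and any other path falls through to `do_root`.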

Both of these new options were born out of specific needs I had for recent client work. If you want to build something on top of sqlchain and need a feature that may also be useful to others, please contact me or open a GitHub issue to discuss it. If the feature has general application, maybe I can work on adding it to sqlchain.